Many large industrial IoT deployments do not scale efficiently on traditional centralized cloud computing models. The massive number of connected devices sending endless streams of data shifts where computing must be done: to the network edge.

Though dedicated data centers are more powerful than network edge appliances, which are constrained by size and space, the speed of light sets a lower bound on latency that hinders long-distance low-latency applications. This, together with the immense number of IoT devices that need to connect to the network, makes relying on centralized resources such as cloud platforms costly and inefficient.

Challenges in Industrial IoT

Most industries make extensive use of IoT technologies (in large part for massive sensor networks) and employ applications that are latency-sensitive and computationally demanding.

Oil rigs, for example, generate nearly 100 GB of data a day, and turbines can generate a terabyte of sensor data every hour, with many more industrial IoT sensor applications generating comparable amounts of data. It is simply not practical, cost-effective or even necessary to send all of this data to the cloud when many of the critical analytics processes don’t require cloud-level computing.
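To put those figures in perspective, the short sketch below converts them into the sustained uplink rate needed to ship everything to the cloud as it is produced. It assumes decimal units (1 GB = 10^9 bytes) and ignores protocol overhead; the function name is illustrative only.

```python
def sustained_mbps(bytes_per_period: float, period_seconds: float) -> float:
    """Average uplink rate (megabits per second) needed to upload data as fast as it is produced."""
    return bytes_per_period * 8 / period_seconds / 1e6


# Assumption: decimal units, no protocol overhead, no compression.
oil_rig = sustained_mbps(100e9, 24 * 3600)  # 100 GB per day
turbine = sustained_mbps(1e12, 3600)        # 1 TB per hour

print(f"oil rig: {oil_rig:.1f} Mbit/s, turbine: {turbine:.0f} Mbit/s")
```

Under these assumptions a single turbine would need a dedicated link of more than 2 Gbit/s, around the clock, just to forward raw data.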

IoT devices generate massive amounts of useful data, but they also generate large amounts of useless information. This leaves considerable room for optimization on network edge appliances, with noise filtering and data compression being two examples.
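As a rough illustration of that kind of edge-side optimization, the sketch below applies a simple deadband filter (drop readings that barely differ from the last one kept) and then compresses what remains with zlib from the Python standard library. The function names, the threshold and the sample data are all hypothetical; a real deployment would choose filters to suit each sensor.

```python
import json
import zlib


def deadband_filter(readings, threshold):
    """Keep a reading only if it differs from the last kept value by more than threshold."""
    kept = []
    for timestamp, value in readings:
        if not kept or abs(value - kept[-1][1]) > threshold:
            kept.append((timestamp, value))
    return kept


def pack(readings):
    """Serialize readings to JSON and compress them before upload."""
    return zlib.compress(json.dumps(readings).encode("utf-8"))


def unpack(blob):
    """Reverse of pack(): decompress and restore (timestamp, value) tuples."""
    return [tuple(item) for item in json.loads(zlib.decompress(blob))]


# Hypothetical temperature trace: small jitter around 20, then a real step to about 25.
raw = [(t, (20.0 if t < 500 else 25.0) + 0.01 * (t % 3)) for t in range(1000)]

filtered = deadband_filter(raw, threshold=0.5)
blob = pack(filtered)

print(f"{len(raw)} raw readings -> {len(filtered)} kept, {len(blob)} bytes to upload")
```

Only the first reading and the step change survive the filter here, so the appliance uploads a few dozen bytes instead of a thousand samples.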

Edge Computing / Fog Computing Powered Analytics

The physical limit that the speed of light places on latency, together with the bandwidth requirements of the myriad IoT devices, makes moving computing resources closer to the source the only option.
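The latency floor can be estimated with simple arithmetic. The sketch below computes the minimum round-trip time imposed by propagation delay alone, assuming signals travel through optical fiber at roughly two thirds of the speed of light in vacuum. The distances are hypothetical examples, and real round trips are longer once routing, queuing and processing are added.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_FACTOR = 2 / 3               # assumption: propagation in fiber is about 2/3 of c


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time in milliseconds from propagation delay alone."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_FACTOR)
    return 2 * one_way_seconds * 1000


# Hypothetical distances from a sensor to where its data is processed.
for label, km in [
    ("on-site edge appliance", 1),
    ("regional datacenter", 500),
    ("distant cloud region", 4000),
]:
    print(f"{label:>24}: at least {min_round_trip_ms(km):.2f} ms")
```

No amount of datacenter hardware removes this floor; only shortening the distance does.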

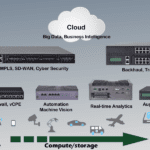

For immense IoT deployments this means that, instead of low-powered IoT gateways with minimal storage and computing capabilities, mid-to-high-end computing and storage hardware is used to deploy IoT network computing appliances. With a fog/edge computing network architecture, IoT devices no longer have to rely on far-away datacenters; they can use the network edge appliances themselves as a powerful resource (which in turn can communicate with datacenters for more complex orchestration and computation).

With powerful Intel x86 appliances at the network edge serving to collect, process and store the data, pushing analytics to the edge is becoming the next step in many industries. Running analytics closer to the source opens the door to new applications and brings significant benefits in:

- Optimization/Efficiency: Bandwidth and storage are valuable and limited resources, and innumerable IoT devices generate never-ending amounts of data to fill them. This makes sending raw, unprocessed data prohibitively expensive on the infrastructure side. With powerful computing appliances at the network edge capable of amassing, storing, processing, analyzing and compressing data locally, IoT deployments can maximize internet bandwidth efficiency and minimize round trips to the datacenter.

- Redundancy/Resiliency: The datacenter’s role is not completely eliminated in a decentralized computing model; instead, it’s reserved for complex queries, orchestration and storage. This opens up the possibility of intelligently programmed gateways capable of localized decision making that continue operating in case of a datacenter outage.

- Low-latency Supremacy: Not having to phone home (to the cloud/datacenter) every time an IoT device wants to store data or requires orchestration takes a massive load off the core network and improves overall latency.
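The resiliency and latency points above can be sketched as a store-and-forward gateway: it makes a simple decision locally, buffers readings while the datacenter is unreachable, and drains the backlog once the link returns. The `EdgeGateway` class, the alarm threshold and the `uplink` callable are hypothetical and stand in for whatever transport and rules a real deployment uses.

```python
from collections import deque


class EdgeGateway:
    """Minimal store-and-forward gateway with a local alarm rule (illustrative only)."""

    def __init__(self, uplink, alarm_threshold, buffer_size=10_000):
        self.uplink = uplink                      # callable; raises ConnectionError during an outage
        self.alarm_threshold = alarm_threshold
        self.backlog = deque(maxlen=buffer_size)  # oldest records are dropped when the buffer is full
        self.alarms = []

    def handle(self, reading):
        # Local decision: no round trip to the datacenter is needed.
        if reading["value"] > self.alarm_threshold:
            self.alarms.append(reading)
        self.backlog.append(reading)
        self.flush()

    def flush(self):
        # Forward buffered readings in order; stop at the first failure and retry later.
        while self.backlog:
            try:
                self.uplink(self.backlog[0])
            except ConnectionError:
                return
            self.backlog.popleft()


# Simulated datacenter link that starts out down.
link = {"up": False}
sent = []


def uplink(record):
    if not link["up"]:
        raise ConnectionError("datacenter unreachable")
    sent.append(record)


gateway = EdgeGateway(uplink, alarm_threshold=90)
gateway.handle({"sensor": "pump-1", "value": 95})  # alarm raised locally despite the outage
gateway.handle({"sensor": "pump-1", "value": 40})

buffered_during_outage = len(gateway.backlog)

link["up"] = True
gateway.flush()  # backlog drains once the link is back
```

A bounded buffer is a deliberate trade-off in this sketch: during a long outage the gateway keeps the most recent readings rather than exhausting local storage.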