Virtual machines are today the de facto standard for deploying software, but they are not the only technology capable of filling this niche. Containers, a technology that isolates applications from the host operating system (much like a virtual machine), are quickly becoming a viable alternative for many software deployment scenarios. Though the two technologies share many similarities in end functionality, containers offer both advantages and disadvantages compared to virtual machines.

Containers and virtual machines: what’s the difference?

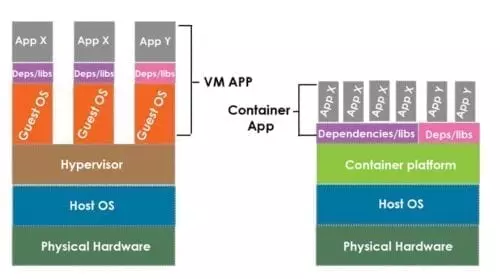

Shown: comparison of a container and virtual machine architecture running X and Y apps

Both technologies share the same goal, isolating an application from other processes and applications on the host system, but they take fairly different approaches.

Virtual machines: As the name suggests, this approach is much broader in scope. It relies on a hypervisor (e.g. KVM, Xen) that emulates an entire physical machine and assigns it a chosen amount of system memory, processor cores and other resources such as disk storage, networking, PCI devices and so forth.

Containers: Container-like technologies have existed for a long time under different names: jails, sandboxes, etc. It is only fairly recently that the technology has matured enough to make headway into production environments. Containers isolate an application from the host through various techniques (on Linux, chiefly kernel namespaces and control groups), but they share the host system's kernel and its facilities (e.g. the network stack) to run applications or virtual network functions (VNFs).

What does this mean for performance, security and portability?

Virtualization technologies and techniques have come a long way in both software and hardware. Most x86 processors manufactured from 2013 onwards include virtualization-specific optimizations (Intel VT-x, AMD-V) that bring the processor overhead of virtualization down to around 2%, a more than fair trade-off for the functionality virtualization brings. The same cannot be said of other resources such as system memory and storage. Since a virtual machine runs an entire operating system on top of the host operating system, it is inherently less efficient in terms of application size and system memory usage. Virtualization not only consumes more system memory, it typically requires a fixed amount to be allocated to each VM, even if the application is not using those resources. Taking all of that into account, system memory usage may well be the most important difference between virtualization and containers.
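To make the memory argument concrete, here is a back-of-the-envelope model in Python. All figures (a 512 MiB guest OS footprint, a fixed 2 GiB allocation per VM, and the per-app usage) are hypothetical and chosen only for illustration. The model also ignores memory overcommit and ballooning, which some hypervisors support, as well as the host kernel's own memory.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical figures for illustration only; these are not measurements.
GUEST_OS_MIB = 512         # assumed memory footprint of each guest OS
VM_ALLOCATION_MIB = 2048   # assumed fixed allocation reserved for each VM


@dataclass
class App:
    name: str
    used_mib: int  # memory the application itself actually uses


def vm_reserved_mib(apps: List[App]) -> int:
    """One VM per app: the host sets aside the full allocation for every VM."""
    return VM_ALLOCATION_MIB * len(apps)


def vm_idle_mib(apps: List[App]) -> int:
    """Memory reserved for the VMs but used by neither the guest OS nor the app."""
    return sum(max(0, VM_ALLOCATION_MIB - GUEST_OS_MIB - a.used_mib) for a in apps)


def container_used_mib(apps: List[App]) -> int:
    """Containers share the host kernel, so only each app's own usage counts."""
    return sum(a.used_mib for a in apps)
```

For three apps using 300, 700 and 150 MiB, `vm_reserved_mib` gives 6144 MiB, of which `vm_idle_mib` reports 3458 MiB sitting unused, while `container_used_mib` gives 1150 MiB.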

One of the real advantages of VMs over containers is portability. Although Docker containers offer a degree of portability between host operating systems by packaging dependencies with the application, there is no guarantee that the underlying host OS is compatible with a given container application: a container shares the host kernel, so, for example, a Linux container image cannot run natively on a Windows kernel. Another advantage is the maturity of virtual machine management solutions, though Kubernetes is steadily closing this gap.

Containers are often seen as more efficient than virtualization by design: instead of duplicating the processes and services of the host operating system inside a guest OS, applications run in sandboxed environments within the host OS. This removes layers of abstraction and essentially runs applications on bare metal. Though this is not untrue, Docker (the leading container project) comes with its own performance costs. For example, Docker's network address translation (NAT) introduces overhead that can impact performance under heavy workloads.
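The NAT overhead comes from an extra translation step on the data path. The toy Python model below sketches destination NAT for a published port; the class and method names are hypothetical, and this is not how Docker is implemented (Docker programs iptables rules in the kernel and, in some cases, runs a userland proxy). The addresses used with it are illustrative only.

```python
from typing import Dict, NamedTuple


class Endpoint(NamedTuple):
    ip: str
    port: int


class ToyPortMapper:
    """Hypothetical, simplified model of destination NAT for published ports.

    Docker does this in the kernel via iptables rules, not in Python;
    this class only illustrates the extra lookup-and-rewrite step.
    """

    def __init__(self) -> None:
        # host port -> container endpoint that traffic is forwarded to
        self._rules: Dict[int, Endpoint] = {}

    def publish(self, host_port: int, container: Endpoint) -> None:
        """Roughly what publishing a port (e.g. `-p 8080:80`) sets up."""
        if host_port in self._rules:
            raise ValueError(f"host port {host_port} is already published")
        self._rules[host_port] = container

    def translate(self, dst: Endpoint) -> Endpoint:
        """Return the rewritten destination of an inbound packet."""
        # Every inbound packet pays for this lookup (plus connection tracking
        # in a real kernel); unpublished ports pass through unchanged.
        return self._rules.get(dst.port, dst)
```

Running a container with `--network host` shares the host's network stack and avoids this translation, at the cost of network isolation.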

Given the low CPU overhead of modern hypervisors, the real efficiency gain of containers comes from three sources: reduced memory usage from eliminating the guest OS, the resulting de-duplication of processes that would otherwise consume additional resources, and the smaller application footprint that follows from both. Combined with the ability to adjust resources such as system memory dynamically, on the fly, this could make containers a much more efficient deployment option.
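On Linux, container runtimes typically enforce memory limits through control groups, and with cgroup v2 a group's hard limit lives in its `memory.max` file, which can be rewritten while the container runs. The Python sketch below assumes cgroup v2 mounted at `/sys/fs/cgroup`; the cgroup name you pass in (for example the hypothetical `demo`) is an assumption, since the path a given runtime uses for a container varies. Docker exposes the same capability through `docker update --memory`.

```python
from pathlib import Path
from typing import Optional

# Assumption: a Linux host with the cgroup v2 hierarchy mounted here.
CGROUP_ROOT = Path("/sys/fs/cgroup")


def set_memory_limit(cgroup: str, limit_bytes: Optional[int],
                     root: Path = CGROUP_ROOT) -> None:
    """Change the hard memory limit of an existing cgroup while it runs.

    None writes "max", which removes the limit. Writing under /sys/fs/cgroup
    normally requires root privileges.
    """
    if limit_bytes is not None and limit_bytes < 0:
        raise ValueError("limit_bytes must be non-negative")
    value = "max" if limit_bytes is None else str(limit_bytes)
    (root / cgroup / "memory.max").write_text(value + "\n")


def get_memory_limit(cgroup: str, root: Path = CGROUP_ROOT) -> Optional[int]:
    """Read the current limit back; None means unlimited."""
    raw = (root / cgroup / "memory.max").read_text().strip()
    return None if raw == "max" else int(raw)
```

Lowering the limit below what the container currently uses forces the kernel to reclaim memory and can trigger the OOM killer, so shrinking a limit should be done with care.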

Another promising characteristic of containers is startup time. Since the application does not have to boot an entire guest OS before launching, containers make a much more agile deployment platform, which could drive adoption in areas such as 5G network slicing.
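Startup-time claims are easy to check on your own hardware. The sketch below is a small, hypothetical timing harness: it runs a command to completion several times and reports the median wall-clock time. Pointing it at a command such as `docker run --rm alpine true` measures container start plus teardown; the equivalent VM measurement depends on your hypervisor tooling and is not shown.

```python
import statistics
import subprocess
import time
from typing import Sequence


def median_startup_seconds(cmd: Sequence[str], runs: int = 5) -> float:
    """Run `cmd` to completion `runs` times and return the median wall-clock time."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises CalledProcessError if the command fails
        subprocess.run(list(cmd), check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)
```

Pull the image beforehand (`docker pull alpine`) so the first run does not include download time.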

When it comes to security, both technologies can suffer from the same host OS, library or application vulnerabilities, though the attack surface of containers is considerably smaller because no additional guest OS is needed. At the same time, hypervisors are more mature and currently offer a more transparent view of running processes. Only time will tell which technology can provide the more secure system.

Conclusion

Both technologies offer distinct advantages:

Virtualization comes with a plethora of time-tested tools, management and orchestration platforms, virtual probes, hyper-converged virtual infrastructure solutions and much more. Portability and interoperability are the characteristics that stand out when compared to containers.

Containers offer increased resource efficiency and service agility. While this might not seem like much, it opens the door to a microservices model that can scale faster and more efficiently. On paper, containers align more closely with NFV/SDN initiatives, and the industry has taken notice: Kubernetes is one of the fastest-growing open source projects to date.

Today, service providers are in the midst of a network evolution and seek to use the best technology available. To that end, many run containers inside virtual machines to take advantage of the superior tooling and infrastructure management solutions available for VMs today. Though this eliminates some of the benefits of containers, it lets service providers use the agility and memory efficiency of containers to offset the inefficiencies of virtual machines, giving them the best of both worlds. Eventually, improved interoperability and standardized APIs may allow containers and virtual machines to work together and create the ideal software deployment solution for SDN/NFV.