Over the past few years, artificial intelligence and machine learning have become two of the hottest buzzwords in the tech industry, thanks to rapidly growing interest in and research into the two subjects. While they are closely related, artificial intelligence (AI) and machine learning (ML) are two different technologies, with AI being the better known of the two thanks to its popularity among sci-fi writers and film directors over the years. While some of Hollywood’s AI representations were loosely based in real science (Ex Machina and Colossus: The Forbin Project, for example), others were pure technological fantasy and painted a somewhat misleading picture of artificial intelligence. Far from the civilization-threatening AIs of many a Hollywood blockbuster, many current AI technologies are used for mundane but valuable work, such as protecting businesses and individuals from targeted and mass cyber attacks. Machine learning, however, is a little less well known, more than likely because it has only recently seen widespread real-world use. But how does it work?

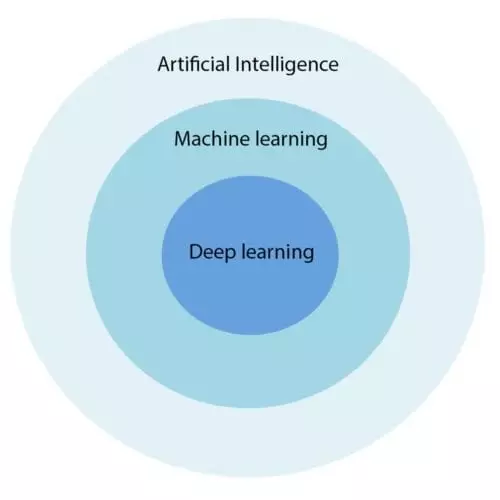

Relation between AI, ML and DL

Machine learning and artificial intelligence are two interrelated technologies. Artificial intelligence is the broad concept of building logic or rule-based intelligence into machines so that they can make decisions autonomously.

AIs are usually broken down into two categories, Narrow (or Applied) AI and General AI:

Narrow or Applied AI is non-sentient artificial intelligence that focuses on one dedicated task. Driverless vehicles are one example. The popular smartphone assistant Siri and intelligent stock trading systems are other examples of Applied AI.

These are groundbreaking technologies. However, General AI is where the real excitement of artificial intelligence lies.

General AI aims to give machines the cognitive capability to learn on their own, in a way that is not too different from a human’s. Machine learning is one of the key techniques to have come out of that pursuit.

Machine learning is a form of AI that gives a machine access to data, often in large volumes (Big Data), and uses algorithms to enable the machine to “learn” from and analyze that data in order to predict an output value that falls within an acceptable range, without being explicitly programmed with the rules for doing so.

Machine learning algorithms are usually labelled as either supervised or unsupervised. Supervised systems learn from example inputs paired with the correct outputs, which are supplied by humans. Unsupervised systems look for structure in data that carries no such labels. Deep neural networking, or Deep Learning (DL), is an approach that can be used in either setting and loosely emulates the hierarchical way human minds learn, reducing the need for hand-crafted human input. In order to be classified as a general or strong AI, an artificial intelligence would have to have some form of machine learning system, most likely Deep Learning, in order to equal or surpass human cognitive abilities.
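To make the supervised/unsupervised distinction a little more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the data is made up, the function names `predict_nearest` and `two_means` are hypothetical, and the two techniques shown (a nearest-neighbour classifier and a one-dimensional k-means with two groups) are simple stand-ins, not what any particular product uses.

```python
from statistics import mean

# Made-up example data: hours of daily device use, labelled by a human.
labelled = [(1.0, "light"), (1.5, "light"), (2.0, "light"),
            (7.0, "heavy"), (8.0, "heavy"), (9.0, "heavy")]


def predict_nearest(x, examples):
    """Supervised: return the label of the closest labelled example (1-NN)."""
    _, nearest_label = min(examples, key=lambda ex: abs(ex[0] - x))
    return nearest_label


def two_means(values, iterations=10):
    """Unsupervised: split unlabelled values into two groups (1-D k-means, k=2)."""
    low, high = min(values), max(values)  # initial guesses for the two centres
    group_low, group_high = list(values), []
    for _ in range(iterations):
        # Assign each value to whichever centre it is closer to.
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        if not group_low or not group_high:
            break  # everything fell into one group; nothing more to refine
        # Move each centre to the average of its group.
        low, high = mean(group_low), mean(group_high)
    return sorted(group_low), sorted(group_high)


if __name__ == "__main__":
    # Supervised: the human-provided labels drive the prediction.
    print(predict_nearest(2.5, labelled))
    # Unsupervised: no labels at all, only the raw numbers.
    print(two_means([value for value, _ in labelled]))
```

The supervised function can only answer because someone labelled the examples first. The unsupervised one is handed bare numbers and has to find the two groups itself.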

How is AI being used now?

There are currently thousands of AI systems in use across the globe. From events and cyber security to healthcare and smart cars, as we continue to integrate and connect our technologies, the case for employing AI and machine learning systems continues to grow. Below are a few common examples of where and how artificial intelligence and machine learning have already been implemented to protect and enhance both our everyday lives and our business activities online.

Security: Whether it be event security at your favorite summer festival or protecting your business’s network infrastructure through cyber security, artificial intelligence is in use in hundreds of security systems around the world. Using machine learning algorithms, AI security systems can use pattern recognition and data access information to pick up network intruders or predict possible security breaches. These systems can also spot things their human counterparts may find difficult to detect, helping to further improve accuracy and speed up security processes.
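As a rough illustration of the pattern-recognition idea, the Python sketch below flags activity that sits far outside a learned baseline. Everything here is an assumption made for the example: the login counts are invented, the function name `find_anomalies` is hypothetical, and a simple z-score rule stands in for the far more sophisticated models a real security product would use.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return observed values more than `threshold` standard deviations
    away from the mean of the baseline (a simple z-score rule)."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different counts as unusual.
        return [x for x in observed if x != mu]
    return [x for x in observed if abs(x - mu) / sigma > threshold]


if __name__ == "__main__":
    # Invented numbers: logins per hour during a normal week...
    normal_logins = [20, 22, 19, 21, 23, 20, 22]
    # ...and during the hours we want to check.
    todays_logins = [21, 24, 95, 18]
    print(find_anomalies(normal_logins, todays_logins))
```

The "learning" step here is nothing more than measuring what normal looks like. The appeal of the approach is that nobody has to write a rule for every possible kind of intrusion in advance.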

Healthcare: AI systems are currently being used to identify and predict illnesses more quickly, drawing on far more processing power than any human can bring to bear, with innovations such as Computer Assisted Diagnosis (CAD) being used in hospitals and research centers in several countries. AI systems are also being used in healthcare assistant apps similar to the general assistant apps Siri and Cortana. These apps provide medication alerts for patients, educational material on health, and human-like interactions that can be used to gauge the user’s current mental state.

Finance: Using proprietary AI systems to predict and execute trades has become the norm in many of the larger trading firms. Being able to predict markets to some degree of accuracy is obviously a desirable trait in any trading system, and with machine learning algorithms continuously being improved, the margins of error these systems work within look set to get smaller and smaller.

Marketing: Financial firms are not the only ones that have been quick to capitalize on artificial intelligence and machine learning; advertisers and retail organizations have also begun to apply these technologies to their operations. By using AI and machine learning to collect and analyze big data, these organizations are able to understand the purchasing patterns of their customers, as well as what they like and dislike, and tailor their adverts and marketing efforts to better suit the tastes of their target markets.

Search Engines: Search engines such as Google and Bing are possibly the best-known users of artificial intelligence and machine learning. By using AI and ML to understand how you search for information and how you react to the results provided, search engines can be improved to deliver better, more accurate results for your next search query. By taking into account where you click and whether you refine or change your search, search engine AIs can work out how well they did in answering your searches.
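The click-feedback loop described for search engines can be sketched in a few lines of Python. This is a toy model under loud assumptions: the class `ClickFeedbackRanker` and its methods are hypothetical names invented for this example, and real search engines combine many more signals than a smoothed click rate.

```python
from collections import defaultdict


class ClickFeedbackRanker:
    """Toy re-ranker that learns from which results users click."""

    def __init__(self):
        self.shown = defaultdict(int)    # times each result was displayed
        self.clicked = defaultdict(int)  # times each result was clicked

    def record(self, result, was_clicked):
        """Log one impression of a result and whether the user clicked it."""
        self.shown[result] += 1
        if was_clicked:
            self.clicked[result] += 1

    def score(self, result):
        """Smoothed click rate; unseen results start at a neutral 0.5."""
        return (self.clicked[result] + 1) / (self.shown[result] + 2)

    def rank(self, results):
        """Order results so the ones users tend to click come first."""
        return sorted(results, key=self.score, reverse=True)


if __name__ == "__main__":
    ranker = ClickFeedbackRanker()
    for _ in range(3):
        ranker.record("page-a", was_clicked=False)  # shown but ignored
    ranker.record("page-b", was_clicked=True)
    ranker.record("page-b", was_clicked=True)
    ranker.record("page-b", was_clicked=False)
    print(ranker.rank(["page-a", "page-b", "page-c"]))
```

The smoothing in `score` is a deliberate choice: a page that has never been shown gets a middling score instead of zero, so new results still get a chance to be seen and judged.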

Artificial Intelligence and Virtual Reality

AI and VR technologies have greater synergies than is commonly appreciated today. AI is fast becoming a key building block for Virtual Reality and Augmented Reality applications. A good example of AI/machine learning and VR solving a real-world problem is the use of medical imaging and Big Data to predict cancer in the human body. Facebook is another example: it is already planning to add artificial intelligence to its platform to understand and describe images, objects and speech, besides offering its VR application of 360-degree videos.

Challenge for existing networks

Service providers are working day and night to upgrade their networks so that they can provide the infrastructure needed to deliver AI and VR applications over the cloud. A number of initiatives are under way in the telecom ecosystem to develop open-standards-based NFV, SDN and Multi-access Edge Computing solutions that offer lower latency, higher speed and more bandwidth. For now, computing will have to be kept at the edge to avoid the latency issues associated with cloud networks. For example, for a vision-based machine learning application, high-performance computing hardware based on Graphics Processing Units (GPUs) will be a basic requirement.

So, as we’ve now seen, artificial intelligence and machine learning are already being used for multiple applications in a variety of different industries. As the Internet of Things expands and incorporates more and more everyday devices, we’ll need to ensure that our cyber security systems are up to the challenge. To do this, steps will have to be taken to ensure not only that AI and ML are put to full use in the applications that stand to gain the most from them, but also that the technology itself doesn’t become the very thing we need to protect against.

AI and ML In The Future

If we look back at our technological history, we can see that as we’ve become more adept at creating and then improving our inventions, the speed at which we innovate seems to have increased dramatically. One eye-opening statistic is that, after the Wright brothers’ first manned flight of a working airplane in 1903, it took fewer than 50 years for that invention to be used to drop the atomic bomb. The fact that fewer than 50 years separate man’s first powered flight from the atomic bomb should enable us to see just how quickly we are creating new and terrifying technologies. This is a cause for concern when we consider artificial intelligence and machine learning. When we’re dealing with inventing ways for machines to learn and eventually think for themselves, we cannot allow ourselves to be blinded by competition or the potential for profit, as doing so could bring about unforeseen and irreversible consequences.